Shanghai ZENTEK Co., Ltd.

GPU-Enabled Container Cloud Platform – Kube Manager

Empowering Digital Hubs with Unified Compute Resource Management

In environments with many GPU users, the lack of unified GPU allocation and management often leads to imbalanced usage, idle resources, or contention, and therefore to low overall utilization. Moreover, when multiple developers share a single machine, differences in their environments and workflows can cause conflicts and inefficiency.

Kube Manager is a container-based cloud platform that provides unified scheduling and allocation of large-scale CPU and GPU resources. It allocates GPUs dynamically and on demand to prevent resource imbalance, and it supports GPU sharing (multiple users running on a single GPU card concurrently) to eliminate contention. Because every workload runs in a container, environments are isolated, so multiple users can share the same machine without conflicts.

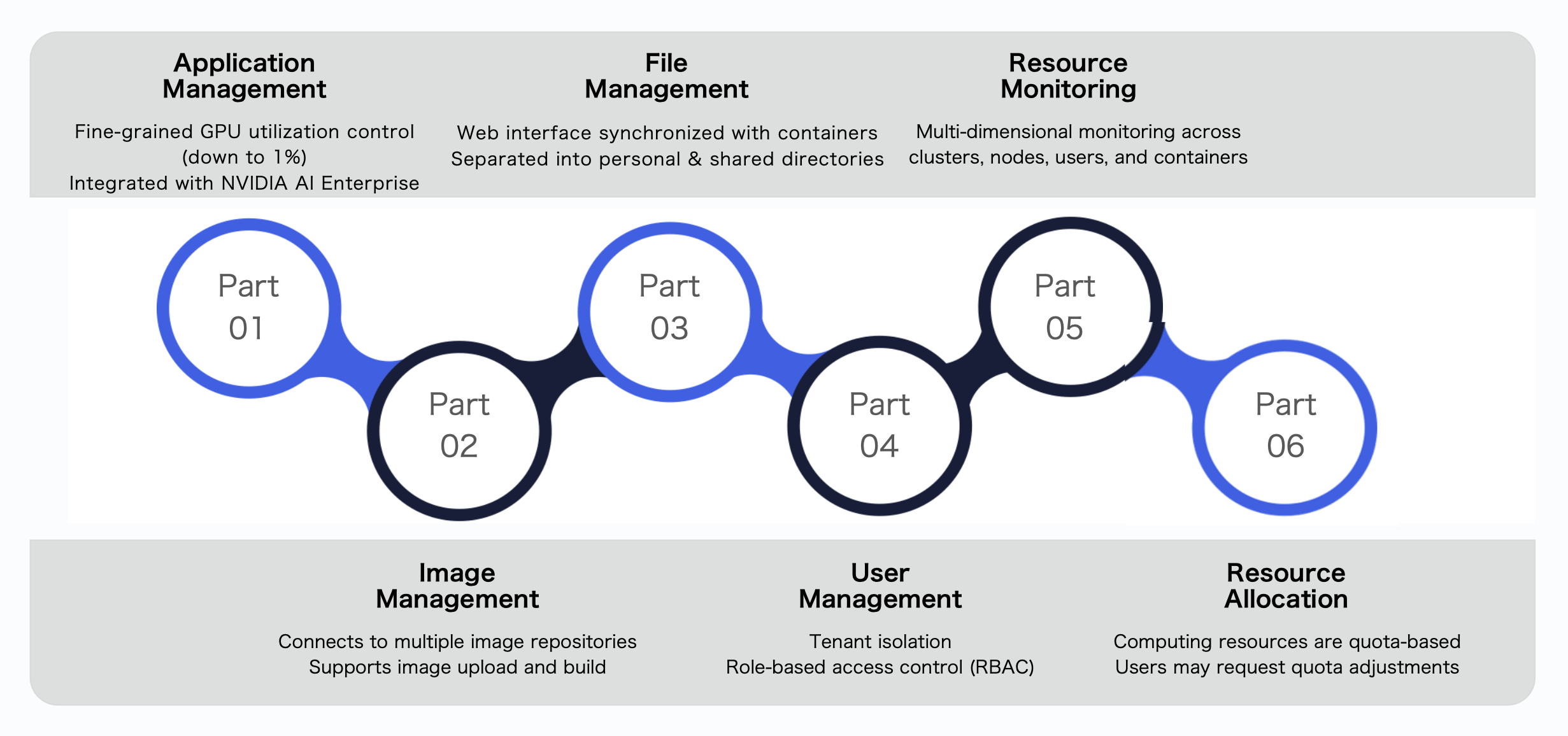

Kube Manager comprises six core modules: Resource Monitoring, Application Management, Image Management, File Management, User Management, and Resource Quotas.

Advantages of Kube Manager

1. Convenient Resource Configuration

- Quickly select required CPU, memory, GPU, and VRAM when creating applications.

- Enforced quotas prevent overuse; quotas can be adjusted as needed.

- Allocation granularity: 1/1000 of a core (one millicore) for CPU and 1 MB for memory.
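The granularity and quota rules above can be sketched in a few lines of Python. This is only an illustration, not Kube Manager's actual API: `Resources`, `to_quantities`, and `fits_quota` are hypothetical names. The quantity strings (`m` for millicores, `Mi` for memory) assume the platform follows Kubernetes conventions and that "1 MB" means one mebibyte.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """Hypothetical resource amount; integer fields mirror the stated granularity."""
    cpu_millicores: int  # 1 unit = 1/1000 of a core
    memory_mb: int       # 1 unit = 1 MB (assumed to mean MiB)

    def __post_init__(self):
        if self.cpu_millicores < 0 or self.memory_mb < 0:
            raise ValueError("resource amounts cannot be negative")


def to_quantities(res: Resources) -> dict:
    """Render the amount as Kubernetes-style quantity strings (an assumption)."""
    return {"cpu": f"{res.cpu_millicores}m", "memory": f"{res.memory_mb}Mi"}


def fits_quota(request: Resources, used: Resources, quota: Resources) -> bool:
    """Return True only if the request keeps total usage within the quota."""
    return (
        used.cpu_millicores + request.cpu_millicores <= quota.cpu_millicores
        and used.memory_mb + request.memory_mb <= quota.memory_mb
    )


quota = Resources(cpu_millicores=4000, memory_mb=8192)
used = Resources(cpu_millicores=3000, memory_mb=4096)
request = Resources(cpu_millicores=1500, memory_mb=2048)  # 1.5 cores, 2 GiB

print(to_quantities(request))
print(fits_quota(request, used, quota))  # CPU would reach 4500m, over the 4000m quota
```

Storing both values as integers makes it impossible to request less than the stated granularity, and a rejected request can be retried after the quota is raised.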

2. Efficient Application Deployment

- Simplified operations: select an image and resources to create applications in minutes.

- Advanced options include environment variables and startup commands.

- Automatic NFS mounting ensures persistent storage and fast file access.
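As a rough picture of what such a deployment might look like underneath, the sketch below builds a Kubernetes-style Pod manifest from an image, a startup command, environment variables, and an NFS volume. The source does not say Kube Manager runs on Kubernetes; that is an assumption. The function name `build_pod_manifest`, the volume name `user-files`, and all example values (registry, NFS server, paths) are hypothetical.

```python
import json


def build_pod_manifest(name, image, command, env, nfs_server, nfs_path,
                       mount_path="/data"):
    """Build a Pod manifest as a plain dict (field names follow the Kubernetes Pod spec)."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                # Startup command, one of the "advanced options"
                "command": list(command),
                # Environment variables as name/value pairs
                "env": [{"name": k, "value": str(v)} for k, v in env.items()],
                # Mount the NFS-backed volume inside the container
                "volumeMounts": [{"name": "user-files", "mountPath": mount_path}],
            }],
            # NFS volume: data outlives the container
            "volumes": [{
                "name": "user-files",
                "nfs": {"server": nfs_server, "path": nfs_path},
            }],
        },
    }


manifest = build_pod_manifest(
    name="train-job",
    image="registry.example.com/pytorch:latest",  # placeholder image
    command=["python", "train.py"],
    env={"EPOCHS": 10},
    nfs_server="nfs.example.internal",            # placeholder NFS server
    nfs_path="/exports/alice",
)
print(json.dumps(manifest, indent=2))
```

Because the volume is defined separately from the container, files written under the mount path remain on the NFS server after the container is deleted.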

3. Flexible GPU Scheduling

- Options include full GPU allocation, MIG (Multi-Instance GPU) partitioning, or percentage-based allocation.

- Percentage-based allocation can go as low as 1% of a GPU's compute or 0.25 GB of VRAM.
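To make the percentage-based option concrete, the sketch below checks a requested share against the stated minimums (1% and 0.25 GB) and estimates how many identical shares fit on one card. The function names are hypothetical. The rule that a card cannot be oversubscribed in either compute percentage or VRAM is an assumption, not documented Kube Manager behavior.

```python
import math

MIN_GPU_PERCENT = 1   # smallest compute share stated in the text
MIN_VRAM_GB = 0.25    # smallest VRAM share stated in the text


def validate_share(gpu_percent: int, vram_gb: float) -> None:
    """Raise ValueError if a requested share is below the stated minimums."""
    if not MIN_GPU_PERCENT <= gpu_percent <= 100:
        raise ValueError("gpu_percent must be between 1 and 100")
    if vram_gb < MIN_VRAM_GB:
        raise ValueError("vram_gb must be at least 0.25")


def max_shares_per_gpu(total_vram_gb: float, gpu_percent: int, vram_gb: float) -> int:
    """Count identical shares that fit on one card without oversubscription (assumed rule)."""
    validate_share(gpu_percent, vram_gb)
    by_compute = 100 // gpu_percent
    by_memory = math.floor(total_vram_gb / vram_gb)
    return min(by_compute, by_memory)


# A 40 GB card split into shares of 10% compute and 4 GB VRAM each
print(max_shares_per_gpu(total_vram_gb=40, gpu_percent=10, vram_gb=4))
# A 24 GB card split into the smallest possible shares: VRAM is the limit
print(max_shares_per_gpu(total_vram_gb=24, gpu_percent=1, vram_gb=0.25))
```

Whichever resource runs out first, compute percentage or VRAM, sets the number of users who can share a card.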

4. Multi-Dimensional Monitoring

- Monitoring at the cluster, node, user, and container levels.

- Detailed visualization of CPU, memory, network, and GPU usage.

- Customizable dashboards for advanced monitoring.

5. Persistent File Storage

- Files are stored persistently on NFS and are unaffected by the container lifecycle.

- Files managed through the web interface are automatically mounted into containers for seamless access.

6. Direct Access to NVAIE

- NVIDIA AI Enterprise (NVAIE) is an end-to-end, cloud-native suite of pretrained AI models and data analytics tools.

- NVAIE is certified by NVIDIA and backed by global enterprise support, which helps speed up AI deployment. Note: commercial use requires a separately purchased license.

Case Studies

Shanghai Jiao Tong University, School of Mathematical Sciences

Kube Manager provides several GPU scheduling options (full GPU, MIG partitioning, and percentage-based allocation), allowing the department to unify resource management across servers, resolve conflicts, and improve utilization.

Nanjing Agricultural University, School of Artificial Intelligence

Using Kube Manager, faculty and students met diverse resource requirements. GPUs were almost fully utilized across more than ten simultaneous instances, which boosted compute efficiency and task throughput and shortened model development cycles.

Zhejiang University, Shanghai Institute for Advanced Study

Kube Manager enforces quotas and resource limits to prevent excessive usage and waste. With queue-based scheduling that puts idle time (nights and weekends) to use, the institute improved both resource efficiency and model training throughput.