Shanghai ZENTEK Co., Ltd.

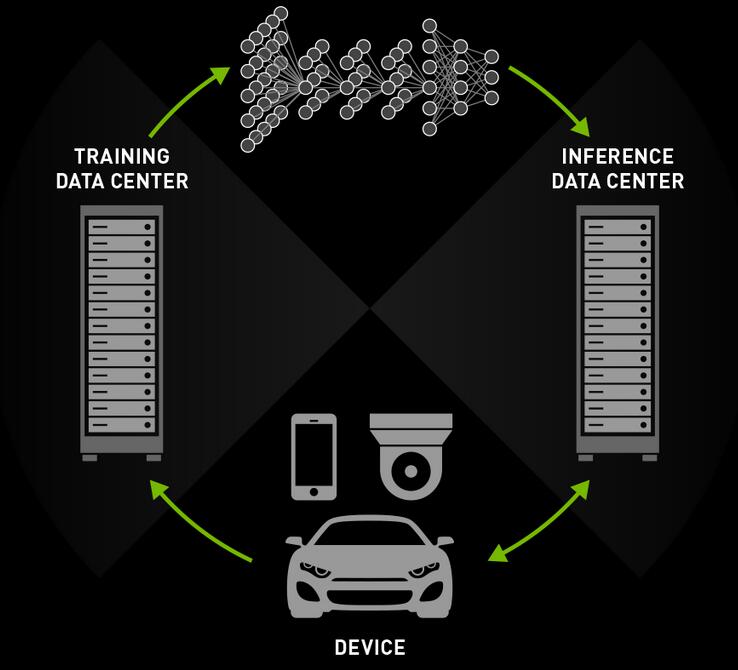

Deep Learning Across Data Centers, Cloud, and Edge Devices

Both deep learning training and inference rely on GPUs. From data centers and desktops to laptops and supercomputers, NVIDIA GPU acceleration is everywhere you need it.

With deep learning and AI models, computers can learn from data on their own.

Training

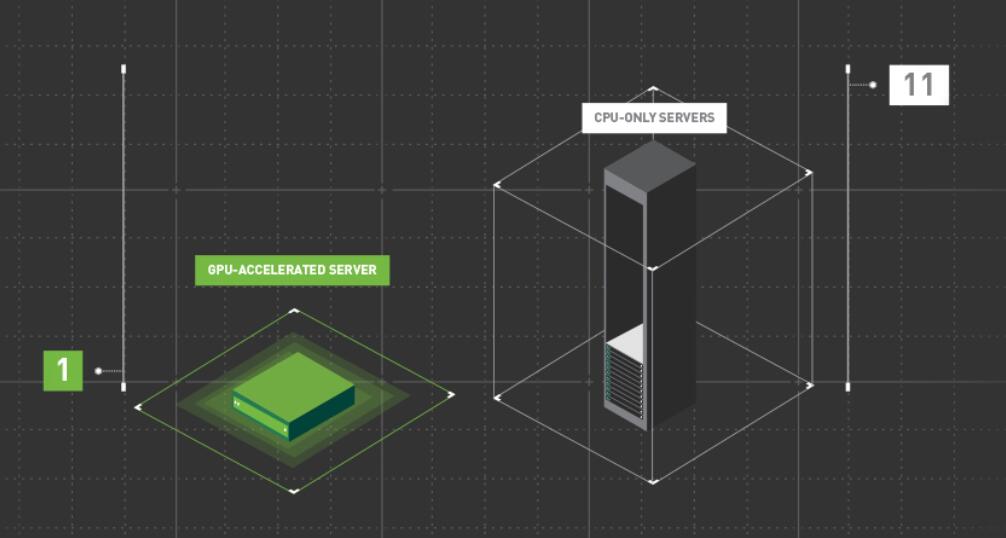

Accelerating model training is key to improving data scientists’ productivity and delivering AI services faster. Servers equipped with NVIDIA® Tesla® V100 or P100 GPUs can reduce training time for complex models from months to hours.

Inference

Inference is where trained neural networks deliver value. It is the backbone of emerging services such as image recognition, speech, video, and search. Compared to CPU-only servers, GPU-powered servers deliver up to 27× higher inference throughput, dramatically reducing costs.