Shanghai ZENTEK Co., Ltd.

Developing with the NVIDIA Isaac for Healthcare remote surgery workflow

Remote surgery is no longer a futuristic concept; it plays an increasingly vital role in healthcare delivery. The global shortage of surgeons is projected to reach 4.5 million by 2030, and remote hospitals find it even harder to attract specialists. Against this backdrop, technology that lets experts operate remotely is moving from experimental exploration to an inevitable trend. Three key developments drive this shift:

1. Mature Network Infrastructure: 5G and low-latency backbone networks now support real-time video collaboration across continents.

2. Advanced AI and Simulation Technologies: Surgeons can now train, and systems can be validated, in highly realistic virtual environments before anyone enters the operating room.

3. Standardized Platforms Ready for Deployment: Developers no longer need to build custom workflows to integrate sensors, video, and robotics. Instead, they can build on shared infrastructure, significantly accelerating R&D.

However, remote surgery still faces several major technical challenges:

1. Ultra-low-latency video requirements for surgical precision

2. Reliable remote robotic control with haptic feedback

3. Seamless hardware integration across diverse solutions

This is where NVIDIA Isaac for Healthcare proves its value. It gives developers a modular, ready-to-use remote surgery workflow that covers video and sensor streaming, robotic control, haptic feedback, and simulation. Developers can adapt, extend, and deploy it for both training and clinical scenarios.

In this article, we explain how the remote surgery workflow operates, how to get started, and why it is the fastest way to build the next generation of surgical robots.

What is NVIDIA Isaac for Healthcare?

NVIDIA Isaac for Healthcare brings NVIDIA's three-computer approach (NVIDIA DGX, NVIDIA OVX, and NVIDIA IGX or NVIDIA AGX) to medical robotics, combining them into a complete development stack. It provides a comprehensive set of tools and foundational building blocks, including:

End-to-end sample workflows (surgical and imaging)

High-fidelity medical sensor simulation

A catalog of simulation-ready assets (robots, tools, anatomical structures)

Pre-trained AI models and policy baselines

Synthetic data generation workflows

With this architecture, developers can move from simulation directly to clinical deployment without changing the underlying infrastructure.

How the Remote Surgery Workflow Works

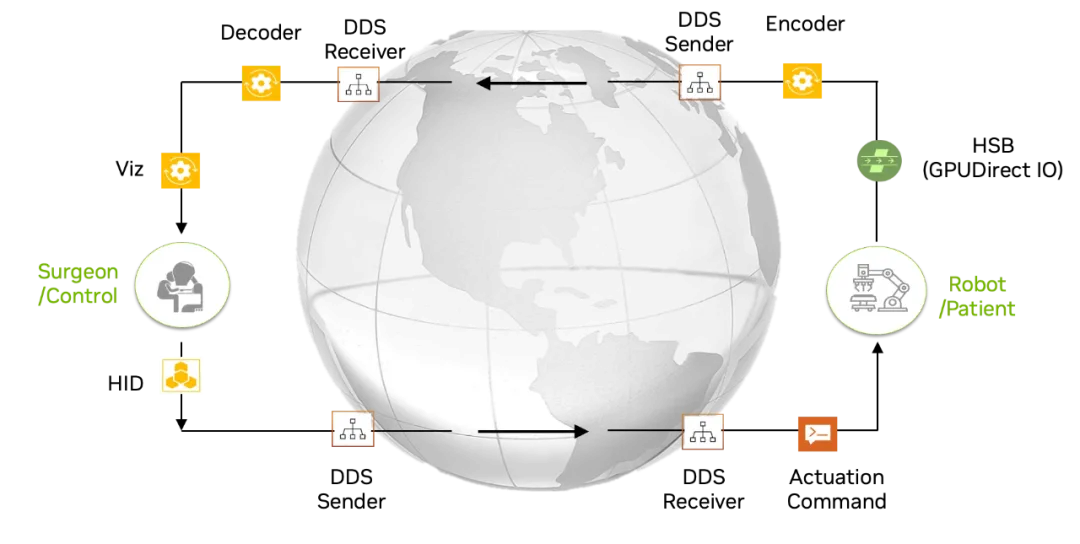

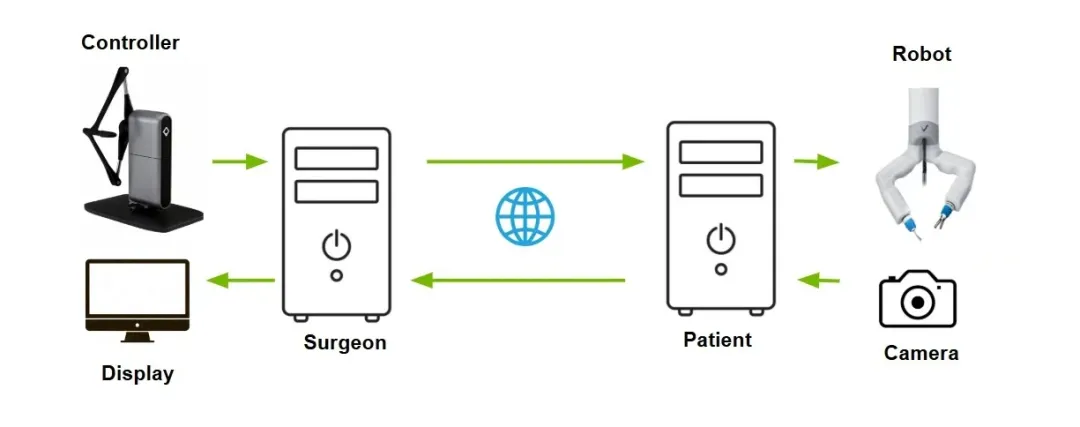

The remote surgery workflow connects a surgeon's control station to a patient-side surgical robot via a high-speed network.

Surgeon Side: The surgeon observes the procedure through multiple camera feeds (providing both an overview and a robotic perspective) and issues commands via an Xbox controller or a haptic feedback controller.

Patient Side: Cameras capture the live surgical site, and the robot executes precise actions based on the surgeon's commands.

Simulation Mode: An identical setup is replicated in NVIDIA Isaac Sim, enabling safe training and skill transfer.

This allows clinicians to perform surgeries in critical situations, in remote hospitals, or even across continents without compromising response speed.

Figure 1. Remote Surgery Workflow Diagram

System Architecture

| Component | What It Does | Why It Matters |
|---|---|---|
| GPUDirect Sensor IO | Streams video directly to the GPU via the NVIDIA Holoscan Sensor Bridge | Enables ultra-low-latency integration of cameras and sensors |
| Video Streaming | Captures multiple camera feeds (robot + room) with hardware acceleration | Delivers high-quality video with near-zero latency |
| RTI Connext DDS | Manages video, control, and telemetry data across domains with QoS control | Ensures secure, reliable, medical-grade communication |
| Control Interface | Supports an Xbox controller or a Haply Inverse3 haptic device | Provides familiar tools with force feedback up to 3.3 N |
| Safety Features | Handles pose reset, tool homing, and dead zones | Guarantees safe recovery in clinical scenarios |

Next, we will delve into the technical details to understand the architecture behind this solution.

1. GPUDirect Sensor IO

The system utilizes NVIDIA Holoscan Sensor Bridge (HSB) to stream video directly to the GPU for real-time processing. HSB's FPGA-based interface connects high-speed sensors via Ethernet, enabling low-latency data transmission. This simplifies the integration of sensors and actuators in edge AI medical applications.

2. Video Streaming

The system captures two camera feeds simultaneously: an overview of the room and a detailed view from the robot. NVIDIA hardware accelerates video encoding, preserving image quality while minimizing latency. You can choose H.264 (better compatibility) or NVJPEG (lowest latency).

Multi-Camera Support:

Simultaneously captures video feeds from robot-mounted cameras and room overview cameras (supporting Intel RealSense cameras and OpenCV/cv2-based capture).

Hardware-Accelerated Encoding:

NVIDIA Video Codec (NVC) supports H.264 or H.265, ideal for bandwidth-constrained scenarios.

NVJPEG Encoding: Ultra-low-latency option with adjustable quality (1-100).
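To make the codec trade-off concrete, here is a minimal Python sketch of per-stream encoder settings. The names `EncoderSettings` and `pick_codec` and the codec strings are hypothetical, not the workflow's actual configuration keys. The sketch only encodes the rules stated above (NVJPEG quality in 1-100, the hardware video codec for bandwidth-constrained links) plus the general assumption that H.265 compresses more efficiently than H.264.

```python
from dataclasses import dataclass

# Hypothetical codec identifiers; the real workflow's configuration keys may differ.
SUPPORTED_CODECS = {"h264", "h265", "nvjpeg"}


@dataclass(frozen=True)
class EncoderSettings:
    """Illustrative encoder settings for one camera stream (assumed names)."""

    codec: str = "h264"
    bitrate_mbps: float = 10.0  # used by the H.264/H.265 hardware video codec
    jpeg_quality: int = 90      # used only by NVJPEG; valid range 1-100

    def __post_init__(self) -> None:
        if self.codec not in SUPPORTED_CODECS:
            raise ValueError(f"unsupported codec: {self.codec!r}")
        if self.codec == "nvjpeg" and not 1 <= self.jpeg_quality <= 100:
            raise ValueError("NVJPEG quality must be between 1 and 100")
        if self.codec != "nvjpeg" and self.bitrate_mbps <= 0:
            raise ValueError("bitrate must be positive for H.264/H.265")


def pick_codec(bandwidth_limited: bool, latency_critical: bool) -> str:
    """Illustrative policy: NVJPEG for lowest latency when bandwidth allows
    (it compresses each frame independently, so it needs more bandwidth),
    H.265 for constrained links, H.264 otherwise for broad compatibility."""
    if latency_critical and not bandwidth_limited:
        return "nvjpeg"
    if bandwidth_limited:
        return "h265"
    return "h264"


if __name__ == "__main__":
    codec = pick_codec(bandwidth_limited=False, latency_critical=True)
    print(EncoderSettings(codec=codec, jpeg_quality=85))
```

Validating in `__post_init__` means a misconfigured stream fails at startup rather than mid-procedure.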

3. Communication Layer

RTI Connext DDS (Data Distribution Service) handles all data exchanged between sites, ensuring medical-grade reliability, low latency, and data integrity. Video streams, control commands, and robot feedback travel on separate channels, each optimized for its specific needs.
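Running each data type on its own channel lets QoS be tuned per stream. The toy model below assumes the DDS KEEP_LAST history policy: a reader buffers only the newest `depth` samples. That suits video, where a stale frame is worthless, while a deeper history or a different policy altogether may suit streams where every sample matters. `KeepLastReader` is a hypothetical teaching class, not part of the RTI Connext API, and the depths are illustrative rather than the workflow's actual QoS profiles.

```python
from collections import deque
from typing import Any, Deque, List


class KeepLastReader:
    """Toy model of a DDS data reader under KEEP_LAST history QoS.

    Only the newest `depth` samples are buffered; when the buffer is full,
    each new sample evicts the oldest unread one. Teaching model only;
    this is not the RTI Connext API.
    """

    def __init__(self, depth: int) -> None:
        if depth < 1:
            raise ValueError("depth must be >= 1")
        # A deque with maxlen discards from the left when appending to a full deque.
        self._buffer: Deque[Any] = deque(maxlen=depth)

    def on_sample(self, sample: Any) -> None:
        """Called for every sample the writer publishes."""
        self._buffer.append(sample)

    def take(self) -> List[Any]:
        """Return and remove all buffered samples, oldest first."""
        samples = list(self._buffer)
        self._buffer.clear()
        return samples


if __name__ == "__main__":
    # Illustrative depths only; the workflow's real QoS profiles may differ.
    video = KeepLastReader(depth=1)
    telemetry = KeepLastReader(depth=10)
    for sample_id in range(1, 6):  # five samples arrive while the app is busy
        video.on_sample(sample_id)
        telemetry.on_sample(sample_id)
    print(video.take())      # [5]
    print(telemetry.take())  # [1, 2, 3, 4, 5]
```

With `depth=1`, a reader that falls behind jumps straight to the newest frame instead of replaying a backlog, which limits how much queued video can add to latency.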

RTI Connext DDS Infrastructure:

Secure, medical-grade reliability with guaranteed message delivery

Domain isolation for video, control commands, and telemetry

Time synchronization via optional NTP server integration

Network optimization with auto-discovery and QoS profiles
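Time synchronization matters because measuring one-way latency compares a timestamp taken on one machine with a clock reading on another. The sketch below applies the standard NTP offset and round-trip-delay formulas (RFC 5905) to move a remote timestamp into the local clock domain. The function names are hypothetical, not part of the workflow, and the calculation assumes symmetric network paths, the same assumption NTP itself makes.

```python
from typing import Tuple


def ntp_offset_delay(t0: float, t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """Clock offset and round-trip delay from one NTP-style exchange.

    t0: request sent (local clock)    t1: request received (remote clock)
    t2: reply sent (remote clock)     t3: reply received (local clock)
    A positive offset means the remote clock is ahead of the local clock.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    delay = (t3 - t0) - (t2 - t1)  # excludes the remote processing time
    return offset, delay


def one_way_latency_ms(sent_remote_ms: float, received_local_ms: float, offset_ms: float) -> float:
    """Latency of a message stamped on the remote side and received locally."""
    # Convert the remote send timestamp into the local clock domain first.
    sent_local_ms = sent_remote_ms - offset_ms
    return received_local_ms - sent_local_ms


if __name__ == "__main__":
    # Remote clock 100 ms ahead, 10 ms each way, 2 ms remote processing.
    offset, delay = ntp_offset_delay(0, 110, 112, 22)
    print((offset, delay))                          # (100.0, 20)
    print(one_way_latency_ms(500, 430, offset))     # 30.0
```

Without the offset correction, the same frame would appear to arrive 70 ms before it was sent, which is why synchronized clocks are a prerequisite for meaningful one-way measurements.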

4. Control Interface

Surgeons can use:

Xbox controller for basic operation

Haply Inverse3 for intuitive 3D robotic control with force feedback

Dual Control Modes:

Cartesian mode: Direct X-Y-Z positioning for intuitive control

Polar mode: Joint-space control for complex maneuvers

Input Devices:

Xbox controller: Dual joysticks for simultaneous MIRA arm control

Haply Inverse3: Up to 3.3 N force feedback for realistic tissue interaction

Safety Features:

Automatic pose reset, tool homing sequences, and configurable dead zones
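The dead zones and force limits above can be illustrated with two small helpers. This is a sketch under assumptions: joystick axes are normalized to [-1, 1], the 0.1 dead-zone radius is an arbitrary example value, and `apply_radial_dead_zone` and `clamp_force` are hypothetical names rather than functions from the workflow. Only the 3.3 N ceiling comes from the Haply Inverse3 figure quoted above.

```python
import math
from typing import Tuple


def apply_radial_dead_zone(x: float, y: float, dead_zone: float = 0.1) -> Tuple[float, float]:
    """Suppress small stick deflections and rescale the remainder.

    x, y: joystick axes normalized to [-1, 1] (assumption).
    Deflections with magnitude <= dead_zone return (0, 0), so sensor
    drift or a resting thumb cannot move the robot. Larger deflections
    are clipped to the unit circle and rescaled so the output magnitude
    ramps from 0 at the dead-zone edge to 1 at full deflection.
    """
    if not 0.0 <= dead_zone < 1.0:
        raise ValueError("dead_zone must be in [0, 1)")
    magnitude = math.hypot(x, y)
    if magnitude <= dead_zone:
        return 0.0, 0.0
    out_magnitude = (min(magnitude, 1.0) - dead_zone) / (1.0 - dead_zone)
    scale = out_magnitude / magnitude  # keep the original direction
    return x * scale, y * scale


def clamp_force(force: Tuple[float, float, float], max_newtons: float = 3.3) -> Tuple[float, float, float]:
    """Scale a force vector down so its magnitude never exceeds max_newtons."""
    norm = math.sqrt(sum(f * f for f in force))
    if norm <= max_newtons:
        return force
    s = max_newtons / norm
    return (force[0] * s, force[1] * s, force[2] * s)


if __name__ == "__main__":
    print(apply_radial_dead_zone(0.05, 0.0))  # inside the dead zone
    print(apply_radial_dead_zone(0.55, 0.0))  # roughly (0.5, 0.0)
    print(clamp_force((0.0, 0.0, 10.0)))      # capped near 3.3 N along z
```

Scaling the whole force vector, rather than clipping each axis separately, preserves the direction of the rendered force while respecting the device limit.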

Figure 2. Remote Surgery System Architecture Diagram

Pre-Surgery Verification: Latency Benchmarks

Low latency is critical for remote surgery. The following benchmark results demonstrate that this workflow meets clinical requirements.

HSB with IMX274 Camera

Pairing the NVIDIA HSB board with an IMX274 MIPI camera yields an ultra-low-latency pipeline.

HDMI Camera with YUAN HSB Board

Medical facilities often use cameras with HDMI or SDI outputs. In such cases, the YUAN HSB board is an excellent solution. It captures video from HDMI or SDI and transmits the data directly to the GPU. The HDMI camera used in this benchmark is the Fujifilm X-T4.

Display benchmarks used a G-Sync-compatible monitor at a 240 Hz refresh rate in Vulkan exclusive full-screen mode. Latency was measured with the NVIDIA Latency and Display Analysis Tool (LDAT).
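If you rerun these benchmarks, you will need to reduce raw measurements to figures like those reported here. Below is a minimal sketch, assuming the ± values are mean ± sample standard deviation and that you already have per-measurement latencies in milliseconds (no particular LDAT export format is assumed). The 50 ms budget and the use of the 95th percentile are illustrative choices, and `summarize_latency` is a hypothetical helper.

```python
import statistics
from typing import Dict, Sequence


def summarize_latency(samples_ms: Sequence[float], budget_ms: float = 50.0) -> Dict[str, object]:
    """Reduce raw end-to-end latency samples to report-ready figures.

    Reports mean ± sample standard deviation (assumed to be what the ±
    in the benchmark denotes) plus a 95th percentile, and checks that
    percentile against a latency budget (50 ms here, an assumption).
    """
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(samples_ms)
    std = statistics.stdev(samples_ms)
    # quantiles(n=20) returns 19 cut points; the last is the 95th percentile.
    # method="inclusive" never extrapolates beyond the observed min/max.
    p95 = statistics.quantiles(samples_ms, n=20, method="inclusive")[-1]
    return {
        "mean_ms": mean,
        "std_ms": std,
        "p95_ms": p95,
        "within_budget": p95 <= budget_ms,
        "label": f"{mean:.1f} ± {std:.2f} ms",
    }


if __name__ == "__main__":
    print(summarize_latency([30, 32, 34, 36, 38]))
```

Checking a tail percentile rather than the mean guards against a pipeline that is fast on average but occasionally stalls.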

Results: HSB with IMX274 Camera

1080p@60fps (H.264, 10 Mbps bitrate)

Photon-to-screen latency: 35.2 ± 4.77 ms

Encoding and decoding: 10.58 ± 0.64 ms

4K@60fps (H.265, 30 Mbps bitrate)

Photon-to-screen latency: 44.2 ± 4.38 ms

Encoding and decoding: 14.99 ± 0.69 ms

The Holoscan Sensor Bridge is available through ecosystem FPGA partners Lattice and Microchip.

Both configurations achieve photon-to-screen latencies below 50 ms, fast enough to support safe remote operation.
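A little arithmetic puts these numbers in context. The sketch below uses only the benchmark figures reported above to compute the frame interval, the average encoded size per frame implied by the bitrate, the end-to-end latency expressed in frames, and the share taken by encoding and decoding. `BENCHMARKS` and `breakdown` are illustrative names, and the average size per frame ignores the fact that keyframes are larger than other frames.

```python
from typing import Dict

# Figures copied from the benchmark above (HSB + IMX274 camera).
BENCHMARKS: Dict[str, Dict[str, float]] = {
    "1080p60 H.264": {"fps": 60, "bitrate_mbps": 10, "photon_to_screen_ms": 35.2, "codec_ms": 10.58},
    "4K60 H.265": {"fps": 60, "bitrate_mbps": 30, "photon_to_screen_ms": 44.2, "codec_ms": 14.99},
}


def breakdown(cfg: Dict[str, float]) -> Dict[str, float]:
    """Derive frame timing and bitrate figures from one benchmark row."""
    frame_interval_ms = 1000.0 / cfg["fps"]
    return {
        "frame_interval_ms": frame_interval_ms,
        # Average compressed size; keyframes are larger, other frames smaller.
        "avg_kb_per_frame": cfg["bitrate_mbps"] * 1e6 / cfg["fps"] / 8 / 1000,
        "latency_in_frames": cfg["photon_to_screen_ms"] / frame_interval_ms,
        "codec_share": cfg["codec_ms"] / cfg["photon_to_screen_ms"],
        # Time left for the rest of the pipeline (capture, transport, display).
        "other_ms": cfg["photon_to_screen_ms"] - cfg["codec_ms"],
    }


if __name__ == "__main__":
    for name, cfg in BENCHMARKS.items():
        b = breakdown(cfg)
        print(f"{name}: {b['avg_kb_per_frame']:.1f} kB/frame, "
              f"{b['latency_in_frames']:.2f} frames, "
              f"codec {b['codec_share']:.0%}, other {b['other_ms']:.1f} ms")
```

At 60 fps, 35.2 ms is about 2.1 frame intervals and 44.2 ms about 2.7, and encoding plus decoding accounts for roughly 30% and 34% of the total; the remainder is spent elsewhere in the pipeline.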

Deployment Flexibility

Because the workflow is containerized, it delivers consistent performance across environments:

Real Operating Room: Connects to actual cameras and robots for live surgeries.

Plug-and-play integration with existing surgical infrastructure

Supports multiple camera types: Intel RealSense, standard USB cameras, MIPI cameras with HSB boards, and HDMI or SDI cameras with YUAN HSB boards

Direct control of the MIRA robot using game controllers or Haply Inverse3 devices

Enables sterile on-site operations by isolating remote operators

Simulation Environment: Uses NVIDIA Isaac Sim for training without risking patient safety.

NVIDIA Isaac Sim integration provides realistic surgical scenarios

Risk-free training with accurate physics and tissue modeling

Skill assessment tools track precision, speed, and technique

On-site recording and playback for review and improvement

Both deployment modes use the same control schemes and network protocols, ensuring that skills developed in simulation can be directly transferred to real-world applications. The platform’s modular design allows institutions to start with simulation-based training and seamlessly transition to live surgeries when ready.
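One way to keep simulation and real deployments interchangeable is to write the control loop against a shared interface and swap only the backend. The sketch below shows that pattern with Python's `typing.Protocol`. `RobotBackend`, `SimulatedRobot`, `HardwareStub`, and `teleop_step` are hypothetical names, and neither backend talks to Isaac Sim or a real robot; this illustrates the design idea, not the workflow's code.

```python
from typing import List, Protocol, Tuple

Vector3 = Tuple[float, float, float]


class RobotBackend(Protocol):
    """Hypothetical interface shared by simulated and physical robots."""

    def send_cartesian_delta(self, delta: Vector3) -> None: ...

    def tool_position(self) -> Vector3: ...


class SimulatedRobot:
    """Stand-in for a simulator-backed robot: integrates commanded motion."""

    def __init__(self) -> None:
        self._pos = [0.0, 0.0, 0.0]

    def send_cartesian_delta(self, delta: Vector3) -> None:
        for i in range(3):
            self._pos[i] += delta[i]

    def tool_position(self) -> Vector3:
        return (self._pos[0], self._pos[1], self._pos[2])


class HardwareStub:
    """Stand-in for a real robot driver: records commands instead of moving."""

    def __init__(self) -> None:
        self.sent: List[Vector3] = []

    def send_cartesian_delta(self, delta: Vector3) -> None:
        self.sent.append(delta)

    def tool_position(self) -> Vector3:
        # A real driver would read encoders; the stub sums what it was sent.
        return (sum(d[0] for d in self.sent),
                sum(d[1] for d in self.sent),
                sum(d[2] for d in self.sent))


def teleop_step(robot: RobotBackend, axes: Vector3, speed_m_s: float, dt_s: float) -> Vector3:
    """One control tick: identical logic regardless of the backend."""
    delta = (axes[0] * speed_m_s * dt_s, axes[1] * speed_m_s * dt_s, axes[2] * speed_m_s * dt_s)
    robot.send_cartesian_delta(delta)
    return delta


if __name__ == "__main__":
    for robot in (SimulatedRobot(), HardwareStub()):
        teleop_step(robot, (1.0, 0.0, -0.5), speed_m_s=0.01, dt_s=0.1)
        print(type(robot).__name__, robot.tool_position())
```

Because `teleop_step` depends only on the protocol, the control logic exercised in simulation is the same code path that later drives hardware.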

Clinical Impact

Early pilot deployments have shown positive outcomes:

Emergency surgery patient referral time reduced by 50%

Access to specialized surgical care in remote areas increased by 3x

Surgical training efficiency improved by 40% through simulation

Zero complications related to device latency in over 1,000 procedures

Building the Future of Surgery

Remote surgery is more than a workflow: it is a foundational element of a new model of healthcare. Going beyond architectural design, it offers engineering-driven solutions to global gaps in access to care.

Experts can perform surgeries on patients regardless of location

Trainees can practice in simulation before engaging with real patients

Hospitals can extend scarce specialized resources without costly referrals

Powered by a reliable, low-latency workflow that connects simulation environments to operating rooms, NVIDIA Isaac for Healthcare makes this vision a reality for developers.

Setting up the remote surgery workflow

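```bash
# Clone the Isaac for Healthcare workflows repository
git clone https://github.com/isaac-for-healthcare/i4h-workflows.git
cd i4h-workflows

# Build the telesurgery container image for the real-hardware setup
workflows/telesurgery/docker/real.sh build
```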

Once the build completes, you can connect your cameras, configure DDS, and try controlling the robot.

Start developing right away: clone the repository, adapt it to new control devices, integrate new imaging systems, or run the latency benchmarks yourself. Every contribution moves remote surgery closer to everyday use.

Documentation and code

Isaac for Healthcare documentation:

https://isaac-for-healthcare.github.io/i4h-docs/

Isaac for Healthcare Workflows – Sample Workflows and Applications:

https://github.com/isaac-for-healthcare/i4h-workflows

Isaac for Healthcare Sensor Simulation – Physics-Based Sensor Simulation:

https://github.com/isaac-for-healthcare/i4h-sensor-simulation

Isaac for Healthcare Asset Catalog – Healthcare Assets:

https://github.com/isaac-for-healthcare/i4h-asset-catalog

Related projects

Isaac Sim – Robot Simulation Platform:

https://github.com/isaac-sim

Holoscan SDK - Edge AI Platform:

https://github.com/nvidia-holoscan

MONAI - Medical Imaging AI:

https://github.com/Project-MONAI

Community

Omniverse Discord - Join the #isaac-for-healthcare channel:

https://discord.gg/nvidiaomniverse

GitHub Issues – Submit error reports and feature requests:

https://github.com/isaac-for-healthcare