
[Thor Pre-sale Now Open] What Is NVIDIA's Three-Computer Solution?

Great News!

ZENTEK is now launching the pre-sale of NVIDIA Jetson AGX Thor!

You can inquire about details through the [Contact Customer Service] section in the bottom menu bar of the ZENTEK official WeChat account.

Alternatively, you can reach us via:

Email: hello@zentek.com.cn

Phone: 177 4088 9963

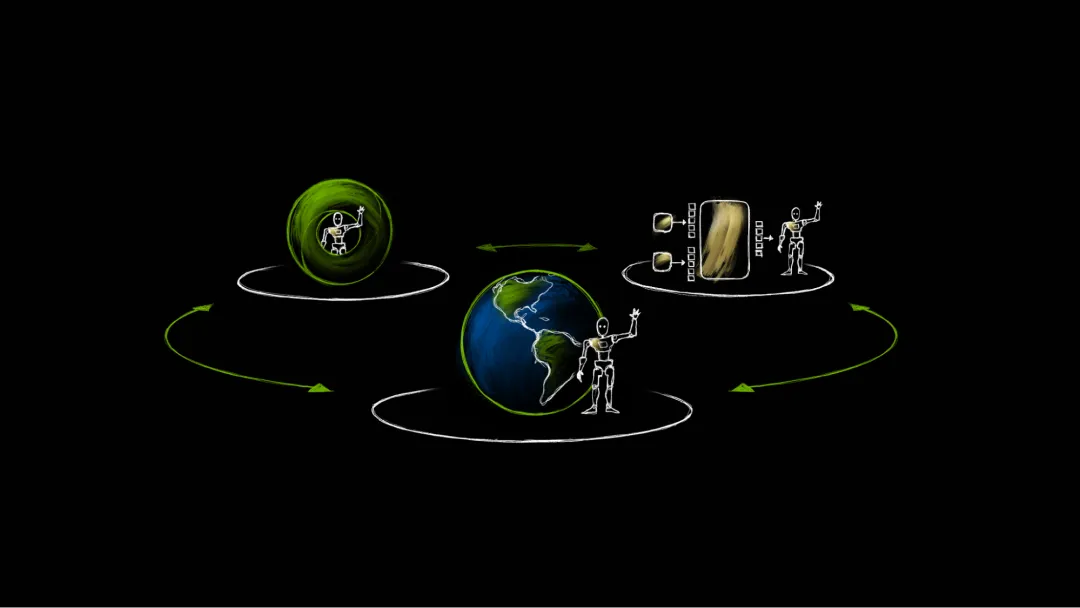

Together, NVIDIA DGX, NVIDIA Omniverse and Cosmos (running on NVIDIA RTX PRO servers), and Jetson AGX Thor accelerate the development of physics-based AI systems, from humanoid robots to robotic factories, across the entire workflow of training, simulation, and inference.

Physics-based AI (NVIDIA's own term is "physical AI") is the practical application of AI in autonomous systems that operate in the real world, such as robots, visual AI agents, warehouses, and factories. It is entering a breakthrough era.

To help developers build efficient physics-based AI systems in fields like transportation, industrial manufacturing, logistics, and robotics, NVIDIA has launched a three-computer solution that covers training, simulation, and inference.

What are NVIDIA’s Three Computers?

NVIDIA’s three-computer solution consists of three core components:

NVIDIA DGX AI Supercomputer for AI training;

NVIDIA Omniverse and Cosmos (based on NVIDIA RTX PRO servers) for simulation;

NVIDIA Jetson AGX Thor for inference, deployed on robots.

This architecture forms a complete lifecycle development loop for physics-based AI, covering everything from model training to deployment.

What is Physics-based AI, and Why is it Important?

Unlike agentic AI, which operates in digital environments, physics-based AI refers to end-to-end models that can perceive the physical world, reason about it in real time, interact with it, and navigate it autonomously.

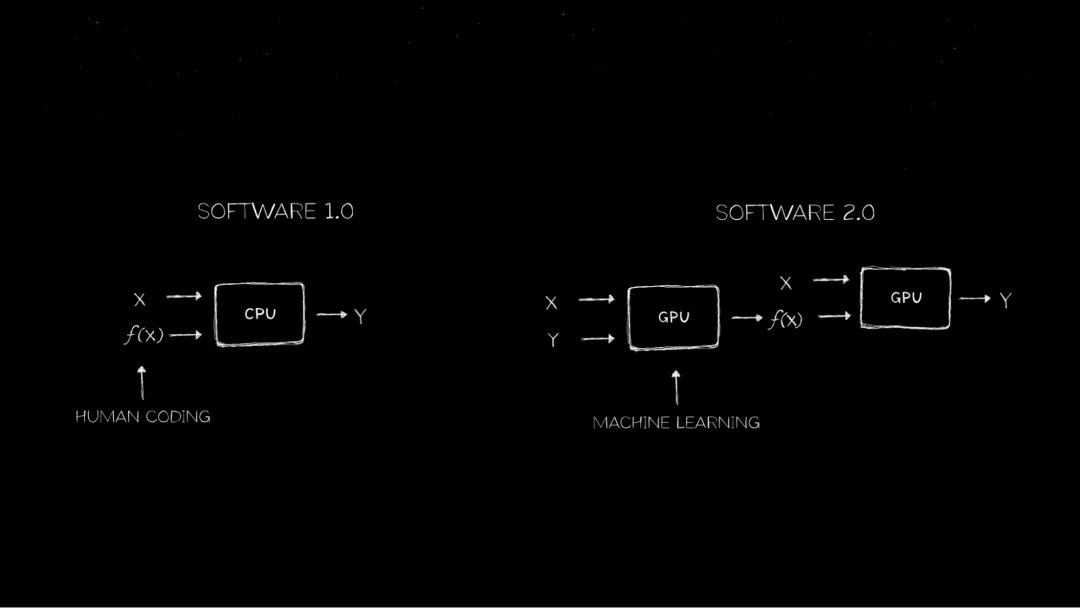

For 60 years, "Software 1.0"—serial code written by human programmers—ran on general-purpose computers powered by CPUs.

Then, in 2012, Alex Krizhevsky, working with Ilya Sutskever under the supervision of Geoffrey Hinton, developed AlexNet, a revolutionary deep learning model for image classification that won the ImageNet image recognition competition.

This marked the industry’s first major encounter with AI. The breakthrough in machine learning—running neural networks on GPUs—officially ushered in the era of Software 2.0.

Today, software can write software itself. Computing workloads worldwide are shifting from general-purpose computing on CPUs to accelerated computing on GPUs—a transition that has long outpaced Moore’s Law.

With generative AI, trained multimodal transformers and diffusion models can generate responses.

Large language models (LLMs) are inherently one-dimensional: they predict the next token in a sequence of units such as letters or words. Image and video generation models, by contrast, are two-dimensional: they predict the next pixel.

None of these models can understand or interpret the three-dimensional world. This is where physics-based AI comes into play.

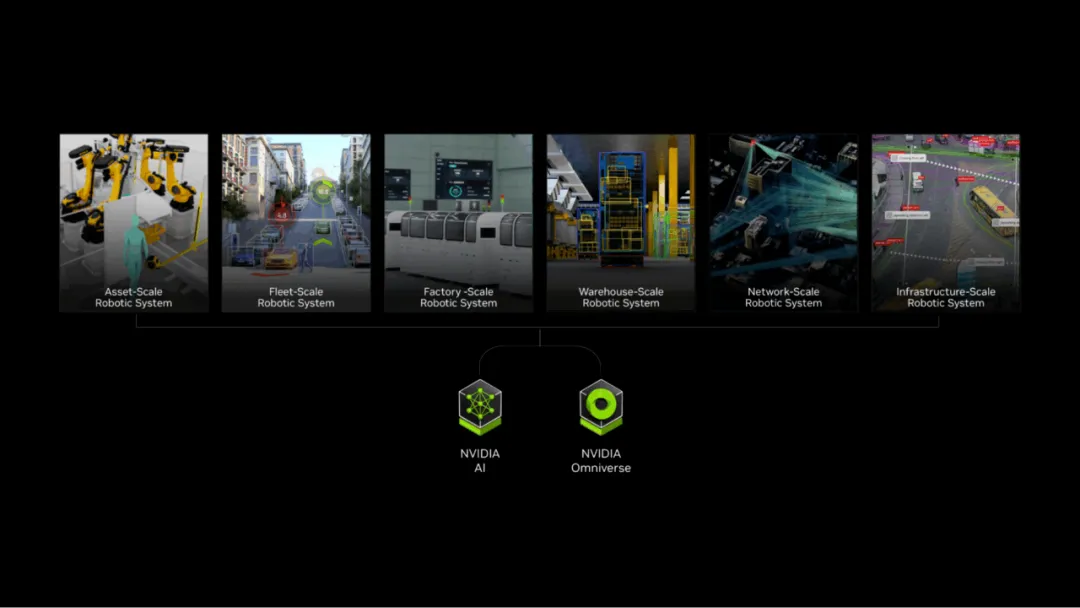

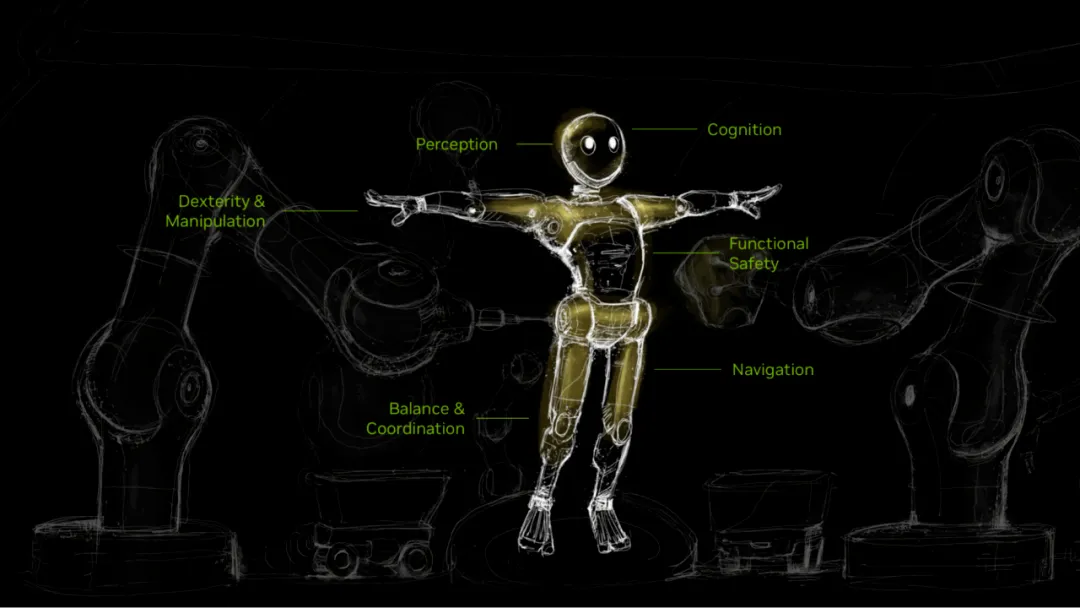

A robot is a system capable of perceiving, reasoning, planning, acting, and learning. Typically, people think of autonomous mobile robots (AMRs), robotic arms, or humanoid robots as "robots," but there are many other forms of robots.

In the near future, any entity that can move or monitor moving objects could become an autonomous robotic system—equipped with the ability to perceive its environment and respond accordingly.

From self-driving cars and operating rooms to data centers, warehouses, factories, even traffic control systems and entire smart cities—everything will shift from static, human-operated systems to autonomous, interactive systems driven by physics-based AI.

Why are Humanoid Robots the Next Frontier?

Humanoid robots represent an ideal general-purpose robotic form because they can operate efficiently in environments designed for humans, while requiring minimal adjustments during deployment and operation.

According to Goldman Sachs, the global humanoid robot market is projected to reach **$38 billion by 2035**—more than six times the approximately $6 billion forecast just two years ago.

Researchers and developers around the world are racing to build the next generation of robots.

How Do NVIDIA’s Three Computers Collaborate to Advance Robotics?

Robots learn to understand the physical world through three distinct types of computational intelligence, each playing a critical role in the robot development process.

Training Computer: NVIDIA DGX

Training robots to understand natural language, recognize objects, and plan complex movements requires massive computing power—capabilities that only specialized supercomputing infrastructure can provide. Thus, the training computer is indispensable.

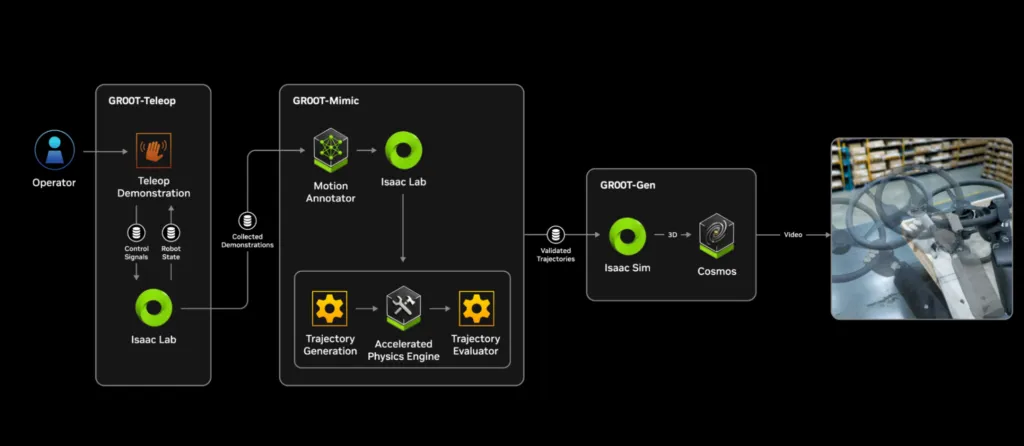

Developers can pre-train their own robot foundation models on the NVIDIA DGX platform. Alternatively, they can start from NVIDIA Cosmos open world foundation models or NVIDIA Isaac GR00T humanoid robot foundation models and fine-tune new robot policies on top of them.

Simulation and Synthetic Data Generation Platform: Omniverse and Cosmos (based on NVIDIA RTX PRO Servers)

The biggest challenge in developing general-purpose robots is the data gap. Researchers working on large language models have the advantage of massive amounts of internet data for pre-training—but no such resource exists in the field of physics-based AI.

Real-world robot data is limited in quantity, costly and time-consuming to collect, and especially hard to obtain for edge cases beyond pre-training coverage. As a result, it is difficult to scale.

With Omniverse and Cosmos, developers can generate massive volumes of diverse, physics-based synthetic data (e.g., 2D/3D images, segmentation masks, depth maps, or motion trajectory data), laying the foundation for model training and performance optimization.
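The idea behind synthetic data can be pictured with a toy sketch. The Python below is not Omniverse or Cosmos code and calls no NVIDIA API; every name in it (`SyntheticSample`, `generate_samples`, the parameter ranges) is a hypothetical stand-in. It only illustrates the principle that a generator which randomizes scene parameters already knows the ground truth, so each sample comes with its label for free.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticSample:
    """One randomized scene description plus its ground-truth label (hypothetical schema)."""
    light_intensity: float          # relative brightness, 0.2 to 1.0
    camera_height_m: float          # camera height above the floor, in meters
    object_xy: tuple[float, float]  # object position on the floor plane
    label: str                      # ground truth, known because we placed the object


def generate_samples(n: int, seed: int = 0) -> list[SyntheticSample]:
    """Draw n randomized scenes; a fixed seed makes the dataset reproducible."""
    rng = random.Random(seed)
    labels = ["box", "pallet", "tote"]  # assumed object classes, for illustration only
    samples = []
    for _ in range(n):
        samples.append(
            SyntheticSample(
                light_intensity=rng.uniform(0.2, 1.0),
                camera_height_m=rng.uniform(0.5, 2.0),
                object_xy=(rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)),
                label=rng.choice(labels),
            )
        )
    return samples
```

In a real pipeline each scene description would be rendered by the simulator into images, masks, or depth maps; here the parameters themselves stand in for the rendered output.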

To ensure the safety and high performance of robot models before real-world deployment, developers must conduct simulation testing in a digital twin environment. NVIDIA Isaac Sim, which is built on Omniverse and runs on NVIDIA RTX PRO servers, enables developers to validate their robot policies in a risk-free simulated environment. Here, robots can repeatedly attempt tasks and learn from mistakes without endangering human safety or causing costly hardware damage.

Researchers and developers can also use NVIDIA Isaac Lab—an open-source robot learning framework that advances reinforcement learning and imitation learning for robotics—helping to improve the efficiency of robot policy training.

Runtime Computing Platform: NVIDIA Jetson AGX Thor

For safe and efficient deployment, physics-based AI systems require a computer that supports the robot’s real-time autonomous operation. This computer must have powerful computing capabilities to process sensor data, perform inference, plan actions, and execute movements—all within milliseconds.

The inference computer on a robot needs to run multimodal AI inference models so that the robot can interact intelligently with humans and the physical world in real time. With its compact form factor, Jetson AGX Thor meets on-board AI performance and energy efficiency requirements and also supports running multiple models together (e.g., control policies, computer vision, and natural language processing).
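The millisecond requirement above amounts to a fixed time budget per control cycle. The following is a minimal sketch in plain Python, not Jetson or Isaac code. The function `run_control_loop` and its parameters are hypothetical names chosen for illustration. It runs a sense, infer, act cycle and counts how often a cycle exceeds its budget.

```python
import time


def run_control_loop(read_sensors, infer, act, budget_s, steps,
                     clock=time.perf_counter, sleep=time.sleep):
    """Run `steps` sense-infer-act cycles and return the number of deadline overruns.

    budget_s is the per-cycle time budget in seconds (e.g. 0.01 for 10 ms).
    clock and sleep are injectable so the loop can be tested without real delays.
    """
    overruns = 0
    for _ in range(steps):
        start = clock()
        observation = read_sensors()   # process sensor data
        command = infer(observation)   # run model inference and plan an action
        act(command)                   # execute the movement
        elapsed = clock() - start
        if elapsed > budget_s:
            overruns += 1              # cycle missed its deadline
        else:
            sleep(budget_s - elapsed)  # wait out the rest of the cycle
    return overruns
```

A production system would rely on a real-time scheduler rather than `time.sleep`, but counting overruns like this is a simple way to check whether an inference model fits a given cycle time.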

How Do Digital Twins Accelerate Robot Development?

Robotic facilities are the product of integrating all these technologies.

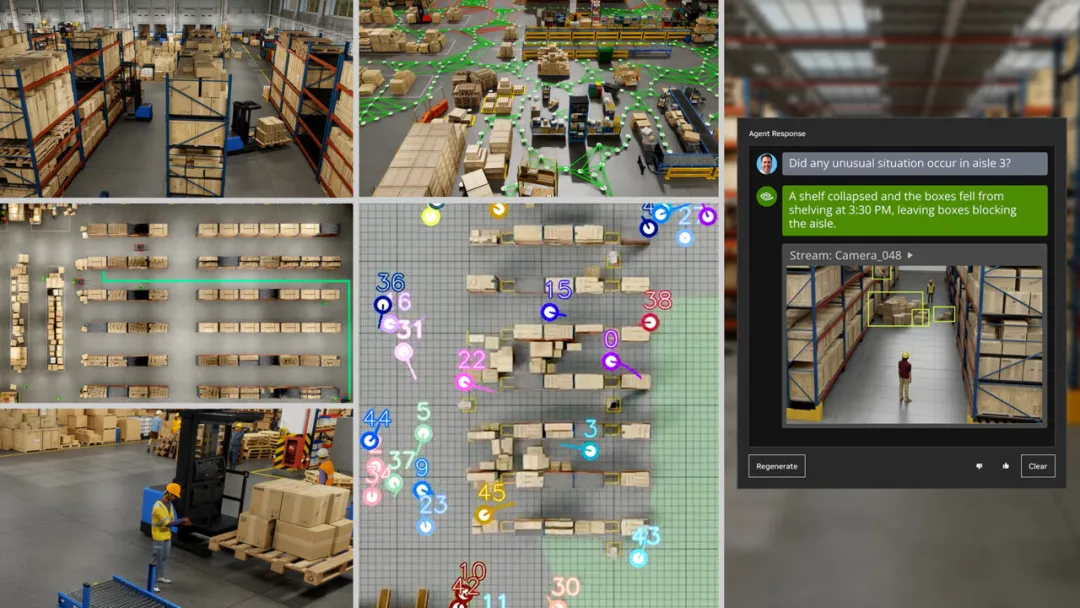

Manufacturing companies like Foxconn and logistics firms like Amazon Robotics can coordinate teams of autonomous robots to work alongside human employees, while monitoring factory operations via hundreds or thousands of sensors.

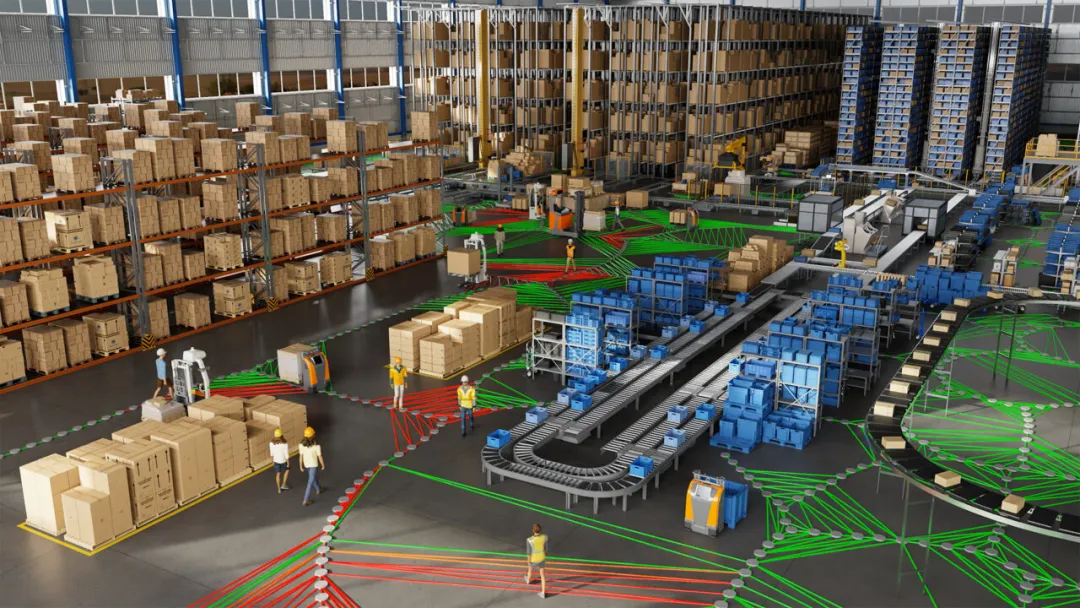

These autonomous warehouses, workshops, and factories will be equipped with digital twin systems—used for layout planning and optimization, operation simulation, and most importantly, software-in-the-loop testing of robot fleets.

Mega—built on the Omniverse platform—is a blueprint for factory digital twins. It allows industrial enterprises to test and optimize robot fleets in a simulated environment before deploying them to physical factories. This helps ensure seamless integration, optimal performance, and minimal disruption.

Mega enables developers to place virtual robots (along with their AI models, or "robot brains") into their factory digital twins. Within the digital twin, robots perform tasks by perceiving the environment, reasoning, planning their next actions, and finally executing the planned actions.

These movements are simulated in the digital environment via the world simulator in Omniverse. The results are then perceived by the robot’s brain through Omniverse’s sensor simulation.

Through sensor simulation, the robot’s brain determines the next action—and the cycle repeats. Meanwhile, Mega accurately tracks the real-time status and position of all elements within the factory digital twin.
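The perceive, decide, act cycle described above can be sketched as a closed loop. This is a toy illustration in plain Python, not Mega or Omniverse code. The `World` class, `robot_brain`, and `run_in_the_loop` are hypothetical names, and the "factory" is reduced to a robot moving along a line toward a goal.

```python
from dataclasses import dataclass


@dataclass
class World:
    """Stand-in for the digital twin: tracks the robot and goal positions."""
    robot_pos: int
    goal_pos: int

    def step(self, move: int) -> None:
        # World simulation: apply the action, limited to one cell per tick.
        self.robot_pos += max(-1, min(1, move))

    def sense(self) -> int:
        # Sensor simulation: report the signed distance to the goal.
        return self.goal_pos - self.robot_pos


def robot_brain(distance_to_goal: int) -> int:
    """Stand-in for the robot's AI model: choose the next move from the observation."""
    if distance_to_goal > 0:
        return 1
    if distance_to_goal < 0:
        return -1
    return 0


def run_in_the_loop(world: World, max_ticks: int = 100) -> list[int]:
    """Repeat sense -> decide -> act until the goal is reached; return the positions visited."""
    trajectory = [world.robot_pos]
    for _ in range(max_ticks):
        observation = world.sense()        # the brain perceives via simulated sensors
        action = robot_brain(observation)  # the brain decides the next action
        if action == 0:                    # already at the goal
            break
        world.step(action)                 # the simulator applies the action
        trajectory.append(world.robot_pos)
    return trajectory
```

For example, `run_in_the_loop(World(robot_pos=0, goal_pos=3))` returns `[0, 1, 2, 3]`. The recorded trajectory plays the role of the state tracking that the article attributes to Mega.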

This advanced software-in-the-loop testing method allows industrial enterprises to simulate and validate changes within the safety of the Omniverse digital twin, helping them predict and resolve potential issues to reduce risks and costs during actual deployment.

Which Enterprises are Using NVIDIA’s Three-Computer Solution?

NVIDIA’s three-computer solution is accelerating the work of robotics developers and robot foundation model builders worldwide:

Teradyne’s Universal Robots (UR) leverages NVIDIA Isaac Manipulator, Isaac acceleration libraries, AI models, and NVIDIA Jetson Orin to build the UR AI Accelerator—a turnkey hardware and software toolkit that enables collaborative robot developers to build applications, speed up development, and shorten the time-to-market for AI products.

RGo Robotics uses NVIDIA Isaac Perceptor to help its wheel.me autonomous mobile robots operate anywhere, anytime. By equipping them with human-like perception capabilities and visual-spatial awareness, RGo enables the robots to make informed decisions.

Humanoid robot manufacturers including 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Fourier Intelligence, Galaxy General Robotics (Galbot), Mentee Robotics, Sanctuary AI, Unitree Robotics, and XPeng Robotics are adopting NVIDIA’s robotics development platform.

Boston Dynamics uses Isaac Sim and Isaac Lab to build quadruped robots, and Jetson Thor to develop humanoid robots—aimed at boosting human productivity, addressing labor shortages, and prioritizing warehouse safety.

Fourier Intelligence leverages Isaac Sim to train humanoid robots for use in fields requiring high levels of interaction and adaptability, such as scientific research, healthcare, and manufacturing.

Galaxy General Robotics uses Isaac Lab and Isaac Sim to develop DexGraspNet—a large-scale dataset for robotic dexterous grasping (applicable to various dexterous robotic hands) and a simulation environment for evaluating dexterous grasping models. It also uses Jetson Thor to achieve real-time, precise control of dexterous hands.

Field AI uses the Isaac platform and Isaac Lab to develop risk-constrained, multi-task, multi-purpose foundation models—enabling robots to operate safely in outdoor field environments.

Future Outlook for Physics-based AI Across Industries

As industries worldwide expand their use of robotics, NVIDIA’s three-computer solution for physics-based AI shows strong potential to assist human work in sectors including manufacturing, logistics, services, and healthcare.

To learn more about the NVIDIA Robotics Platform and start full-lifecycle development (training, simulation, and deployment) of physics-based AI:

https://www.nvidia.com/en-us/industries/robotics/

The order of enterprise names in this article does not imply priority.