Jetson Thor vs. AGX Orin: 7.5 times more computing power, 3.5 times better energy efficiency. What makes NVIDIA's new generation of robot "brain" stand out?

Editor's Recommendation:

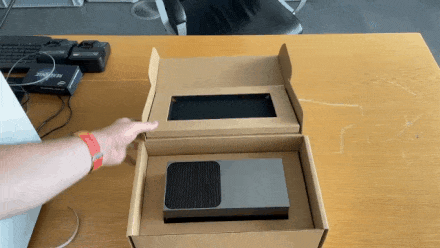

The Thor Developer Kit is now available for pre-order! Developers and enterprise users can place priority orders through ZENTEK to get early access to the next-generation leap in physical AI computing!

In the rapidly evolving field of robotics, the computing module is the robot's "brain" and directly determines what a device can do and how efficiently it operates. NVIDIA's Jetson Thor Developer Kit, with its Blackwell-based performance, flexible deployment design, and extensive ecosystem support, is positioned as the "most powerful robotics computing core currently available." It can power humanoid robots, mobile robots, or autonomous driving devices. This article analyzes Jetson Thor's technical advantages and value in terms of hardware specifications, design details, functional features, application scenarios, and product comparisons.

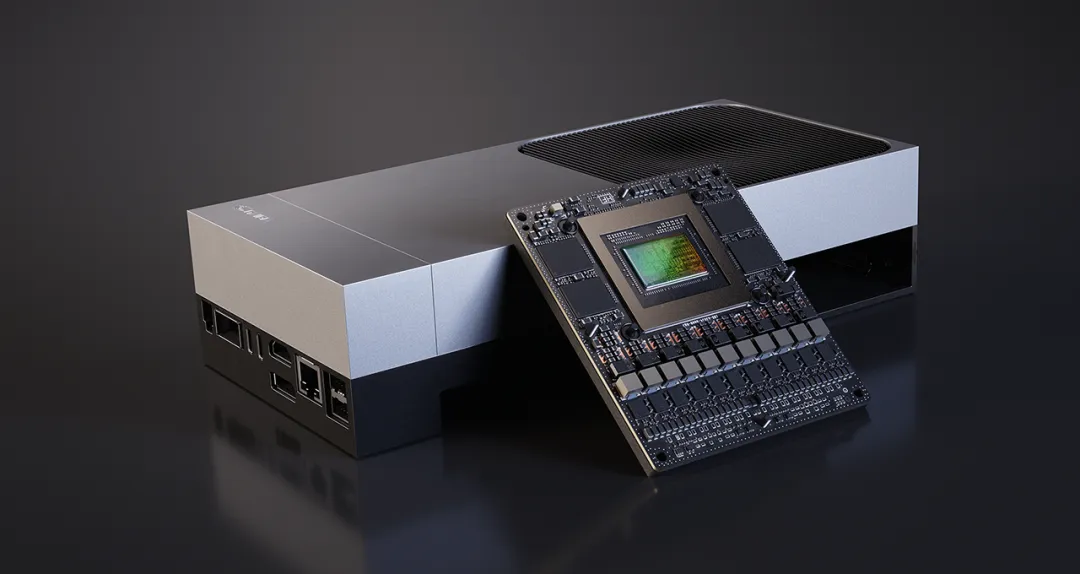

1. Core Hardware: A Computing Beast Powered by Blackwell Architecture

Jetson Thor's core competitiveness stems from its top-tier hardware configuration, designed for high-load AI tasks and robotics control scenarios. Its NVIDIA Blackwell architecture GPU integrates 2,560 CUDA cores and 96 Tensor cores. The CUDA cores provide robust computational support for general-purpose computing, efficiently handling parallel tasks such as robot motion control and sensor data parsing. The Tensor cores are optimized for AI inference and training, excelling in deep learning scenarios like computer vision and natural language processing, providing the underlying power for robot "perception" and "decision-making."

On the CPU side, Thor features a 14-core ARM processor, balancing multi-tasking capability and energy efficiency. It can simultaneously run multiple processes such as the robot operating system (e.g., ROS), sensor data acquisition, and motion planning without computational bottlenecks. The memory configuration breaks with industry convention: 128GB of high-speed memory is enough to run very large AI models locally (e.g., language models with tens of billions of parameters) without relying on cloud computing, which avoids network latency in real-time robot control.
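To make the memory claim concrete, here is a minimal back-of-the-envelope sketch. It assumes weight storage is simply parameter count times bytes per parameter, and it ignores the KV cache, activations, and runtime overhead, so real usage will be higher. The precision table and function names are illustrative, not part of any NVIDIA tool.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores KV cache, activations, and runtime overhead.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, memory_gb: float = 128.0) -> bool:
    """True if the weights alone fit in the given memory budget."""
    return weight_memory_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    for params in (3e9, 70e9):
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, precision)
            ok = fits(params, precision)
            print(f"{params / 1e9:.0f}B @ {precision}: {gb:.1f} GB, fits in 128 GB: {ok}")
```

Under these assumptions, a 70-billion-parameter model needs reduced precision (8-bit or lower) for its weights to fit in 128GB, while a 3-billion-parameter model fits comfortably at 16-bit.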

In terms of performance, Jetson Thor is a generational leap over its predecessor, Jetson AGX Orin: AI performance is 7.5x that of Orin, enabling it to handle more complex multimodal perception tasks (e.g., simultaneously parsing data from multiple cameras and LiDAR). Energy efficiency is 3.5x higher, meaning Thor consumes less power for the same computational output, which suits the endurance requirements of mobile devices like robots. Official data shows that its sustained computational output can stably support the joint control, environmental perception, and decision-making reasoning of entire humanoid robots, completely shattering the industry perception that "mini computing modules cannot power complex robots."
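The two headline ratios can be combined with simple arithmetic. The sketch below assumes "energy efficiency" means performance per watt and that both figures refer to comparable operating points; neither assumption is confirmed by the figures quoted here, so treat the result as illustrative only.

```python
def implied_power_ratio(perf_ratio: float, efficiency_ratio: float) -> float:
    """Power draw relative to the baseline when running at full performance.

    Assumes efficiency = performance / power, so power = performance / efficiency.
    """
    return perf_ratio / efficiency_ratio


def power_at_equal_output(efficiency_ratio: float) -> float:
    """Power draw relative to the baseline when delivering the same output."""
    return 1.0 / efficiency_ratio


if __name__ == "__main__":
    full = implied_power_ratio(7.5, 3.5)
    same = power_at_equal_output(3.5)
    print(f"At full performance: about {full:.2f}x the baseline power")
    print(f"At equal output: about {same:.2f}x the baseline power")
```

In other words, under these assumptions Thor would use roughly 29% of Orin's power for the same work, but about 2.1x the power when all 7.5x of its performance is used, which is worth keeping in mind for battery sizing.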

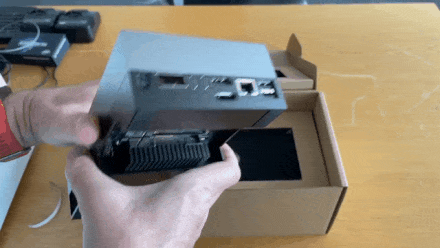

2. Design Details: Balancing Compact Form Factor and Flexible Thermal Management

As a developer kit for robotics, Jetson Thor's form factor fully considers practical deployment needs, adopting a "core module + carrier board" combination. The core Jetson module measures just 100mm (L) × 87mm (W) × 15mm (H), similar in size to the previous-generation Orin, allowing it to be easily embedded into compact spaces like robot torsos or joints. The carrier board integrates all external interfaces and expansion components, facilitating direct testing or rapid integration into robot systems.

1. Thermal System: Ensuring Stability Under High Load

Thor's thermal design is key to its sustained high-performance operation. The developer kit combines a large heatsink, heat pipes, and dual fans: the heatsink covers the core heat-generating areas, with a total surface area far exceeding Orin's thermal components, and incorporates multiple heat pipes to quickly conduct heat from the GPU and CPU to the fins. The fans, concealed beneath the fins, are designed for low noise, providing strong airflow without disturbing the robot's operating environment.

More innovatively, Thor supports "flexible thermal modification"—developers can remove the kit's built-in heatsink and fan and directly attach the Jetson core module to the robot's metal chassis (base/body), utilizing the chassis for passive cooling. This design not only reduces the module's weight by approximately 30% (crucial for load control in mobile robots) but also avoids computational throttling due to fan failures, making it particularly suitable for industrial robots or humanoid robots with extremely high stability requirements.

2. Interface Configuration: Centralized Design and High-Speed Expansion

Thor's interface layout is optimized, with all external interfaces concentrated on one side of the carrier board, significantly simplifying the wiring process inside robots and reducing integration difficulty. Its core interfaces include:

Power Interface: Supports USB-C power delivery (up to 240W), compatible with mainstream industrial power supplies, while the USB-C interface can also handle data transmission;

Display Interfaces: Equipped with HDMI 2.1 and DisplayPort for connecting external screens to view the robot's perspective or debugging interface in real-time;

Network and High-Speed Interfaces: One 10G Ethernet port for regular data transmission, plus one QSFP28 high-speed interface (theoretical rate 200Gbps), which is a highlight: it can directly connect to high-bandwidth devices (e.g., multi-channel cameras, high-speed motor controllers), enabling low-latency transmission of sensor data and control commands and compensating for the lack of additional M.2 slots;

Expansion Interfaces: Integrated Wi-Fi and Bluetooth modules (antennas require separate installation), supporting wireless debugging and data transmission; multiple USB ports and a debugging USB port for connecting peripherals like keyboards and USB flash drives.

However, compared to the previous-generation AGX Orin, Thor simplifies some interfaces: it removes the 40-pin GPIO header and the dedicated camera interface, which may affect compatibility with simple GPIO-controlled sensors (e.g., infrared distance measurement modules) or older cameras. With the high-speed expansion capability of QSFP28, however, developers can use adapter boards to connect multiple devices, which partly offsets the reduced variety of interfaces.
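As a rough sizing aid for the network interfaces listed above, the following sketch estimates how many uncompressed camera streams fit on a link. The resolution, frame rate, and pixel format are illustrative assumptions, and protocol overhead is ignored, so the real number of streams will be lower.

```python
def raw_stream_bps(width: int, height: int, fps: int, bytes_per_pixel: int = 3) -> int:
    """Bit rate of one uncompressed video stream in bits per second."""
    return width * height * bytes_per_pixel * 8 * fps


def max_streams(link_bps: float, stream_bps: float) -> int:
    """Whole number of streams that fit on the link, ignoring protocol overhead."""
    return int(link_bps // stream_bps)


if __name__ == "__main__":
    # Assumed example: 1080p, 30 frames per second, 3 bytes per pixel (RGB).
    stream = raw_stream_bps(1920, 1080, 30)
    print(f"One raw 1080p30 RGB stream: {stream / 1e9:.2f} Gbit/s")
    print(f"Streams on a 10 Gbit/s link: {max_streams(10e9, stream)}")
```

The same function can be called with a different link rate to size traffic for the high-speed port or for an adapter board.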

3. Core Functions: Full Workflow Support from Simulation to Deployment

Jetson Thor is not just a computing platform; through its software ecosystem and functional design, it covers the entire "simulation-debugging-deployment" workflow of robotics development, and is particularly strong in scenarios like hardware-in-the-loop (HIL) testing, AI model deployment, and multimodal data processing.

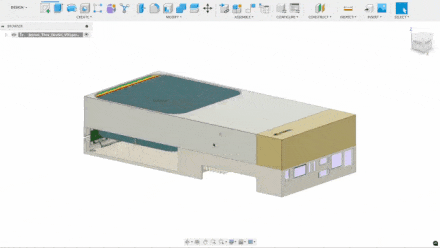

1. Hardware-in-the-Loop (HIL): The "Bridge" Between Simulation and Reality

In robotics development, "software-in-the-loop (SIL)" can only verify algorithm logic, while "hardware-in-the-loop" simulates real hardware operating environments, significantly reducing deployment risks. Thor's HIL solution has a clear process:

Simulation End: Run Isaac Sim (NVIDIA's robot simulation platform) on a workstation (PC) to build a virtual robot environment and generate sensor data like camera images and joint states.

Computing End: Thor communicates with the workstation via local Wi-Fi, receives sensor data generated by the simulation, and runs AI policy models (e.g., Groot vision-language-action models).

Closed-Loop Control: Thor parses the data and outputs joint control commands (e.g., new motor positions), feeds them back to the workstation; the simulation platform updates the virtual robot's state based on the commands, generating new sensor data, forming a closed loop.
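The three steps above can be sketched as a toy closed loop. Everything here is a hypothetical stand-in: `ToySimulator` is not the Isaac Sim API, `toy_policy` is a simple proportional controller rather than a Groot model, and plain function calls replace the Wi-Fi link between workstation and Thor.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    joint_positions: List[float]
    target_positions: List[float]


class ToySimulator:
    """Stand-in for the simulation side (hypothetical, not the Isaac Sim API)."""

    def __init__(self, start: List[float], target: List[float]) -> None:
        self.joints = list(start)
        self.target = list(target)

    def observe(self) -> Observation:
        # Step 1: the simulation generates "sensor" data.
        return Observation(list(self.joints), list(self.target))

    def apply(self, command: List[float]) -> None:
        # Step 3: the simulation updates the virtual robot from the command.
        # Simplification: joints reach the commanded positions within one step.
        self.joints = list(command)


def toy_policy(obs: Observation, gain: float = 0.5) -> List[float]:
    """Stand-in for the policy on the computing side: step toward the target."""
    return [q + gain * (t - q)
            for q, t in zip(obs.joint_positions, obs.target_positions)]


def run_closed_loop(sim: ToySimulator, steps: int) -> List[float]:
    for _ in range(steps):
        obs = sim.observe()        # simulation -> computing side
        command = toy_policy(obs)  # Step 2: policy produces joint commands
        sim.apply(command)         # computing side -> simulation
    return sim.joints


if __name__ == "__main__":
    sim = ToySimulator(start=[0.0, 1.0], target=[1.0, -1.0])
    print(run_closed_loop(sim, steps=20))
```

The point of the structure is that the policy only ever sees an `Observation`; swapping the toy simulator for real sensors would not change the policy's interface, which is the property the HIL setup relies on.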

In this mode, Thor fully simulates the role of the real robot's "brain." From its perspective, there is no essential difference between simulated data and real sensor data. Developers can use HIL testing to optimize algorithms (e.g., adjust the action accuracy of the Groot model), avoiding the risk of hardware damage from debugging directly on real robots. The documented "nut sorting task" demonstration is based on this solution: the simulation randomly changes container positions, Thor runs the Groot model to parse camera images, autonomously decides robotic arm actions, achieving a success rate that meets industry practical standards.

2. AI Model Deployment: From Visual Decision-Making to Language Interaction

Thor has strong compatibility with mainstream AI models and can directly deploy multiple key models:

Groot Vision-Language-Action Model: As the core model for vision-action mapping, Groot can receive camera images (e.g., visual information of nuts and containers) combined with natural language instructions (e.g., "pour the nuts from the red container into the yellow container") to generate robotic arm joint control commands, achieving "what you see is what you get" task execution.

Video Analytics Models: Support real-time object detection and anomaly recognition. The documentation demonstrates a "factory safety monitoring" scenario—the model can detect targets like personnel, ladders, and safety helmets in video, automatically identify dangerous behaviors like "standing on a ladder without a safety helmet," and generate alerts with response latency below 100ms.

Large Language Models (LLMs): Support local operation of large-parameter models like Llama 3.3 (70 billion parameters) and Llama 3.2 (3 billion parameters). Tests show that the 70B parameter model, while slightly slower in response (single Q&A takes about 5-8 seconds), delivers highly accurate answers; the 3B parameter model improves response speed by 3x, meeting the needs of real-time language interaction for robots (e.g., voice command parsing).
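For the factory safety monitoring scenario described above, the step from raw detections to an alert can be illustrated with a simple geometric rule. This is a hypothetical heuristic written for illustration, not the logic used in the documented demo: a person counts as "on a ladder" if their box overlaps a ladder box, and as "wearing a helmet" if a helmet's center falls in the top third of their box.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2); y grows downward


@dataclass
class Detection:
    label: str
    box: Box


def boxes_overlap(a: Box, b: Box) -> bool:
    """True if the two axis-aligned boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def has_helmet(person: Box, helmets: List[Box]) -> bool:
    """True if some helmet center lies within the top third of the person box."""
    x1, y1, x2, y2 = person
    head_bottom = y1 + (y2 - y1) / 3.0
    for hx1, hy1, hx2, hy2 in helmets:
        cx, cy = (hx1 + hx2) / 2.0, (hy1 + hy2) / 2.0
        if x1 <= cx <= x2 and y1 <= cy <= head_bottom:
            return True
    return False


def find_violations(detections: List[Detection]) -> List[Box]:
    """Return boxes of people who overlap a ladder and have no helmet."""
    people = [d.box for d in detections if d.label == "person"]
    ladders = [d.box for d in detections if d.label == "ladder"]
    helmets = [d.box for d in detections if d.label == "helmet"]
    return [p for p in people
            if any(boxes_overlap(p, l) for l in ladders)
            and not has_helmet(p, helmets)]


if __name__ == "__main__":
    frame = [
        Detection("person", (100, 50, 160, 250)),
        Detection("ladder", (90, 100, 180, 400)),
    ]
    print(find_violations(frame))
```

A production system would also need tracking across frames and confidence thresholds; the sketch only shows how per-frame detections can be combined into a rule.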

3. Software Ecosystem: Out-of-the-Box Development Support

Thor comes pre-installed with an Ubuntu Linux-based system and is compatible with NVIDIA's full AI software stack: components like CUDA (computing power scheduling), cuDNN (deep learning acceleration), and TensorRT (inference optimization) are pre-installed, eliminating the need for manual environment setup. It also supports ROS 2 (Robot Operating System) and Isaac Lab (robot learning platform), seamlessly connecting to development tools for robot motion control and path planning. This "out-of-the-box" ecosystem design significantly shortens the cycle from hardware acquisition to algorithm deployment.

4. Product Comparison: Key Differences with Jetson AGX Orin

As two flagship products of the Jetson series, Thor and Orin have distinct positioning and characteristics, compared across the following dimensions:

| Comparison Dimension | Jetson Thor | Jetson AGX Orin |
| --- | --- | --- |
| Core Architecture | Blackwell | Ampere |
| AI Performance | ~7.5x Orin | Base flagship performance |
| Energy Efficiency | ~3.5x Orin | Traditional energy efficiency |
| Thermal Design | Large heatsink + heat pipes + removable mod | Standard heatsink + fan |
| Interface Layout | Interfaces concentrated on one side, QSFP28 | Interfaces scattered, includes 40-pin GPIO & dedicated camera port |
| Core Module Size | 100×87×15mm | Similar size |
| Expansion Flexibility | Relies on QSFP28 high-speed expansion | Supports more traditional interface expansion |

Overall, Orin is more suitable for small to medium-sized robots (e.g., mobile robots, single-arm robotic arms), while Thor, with its stronger computing power, higher energy efficiency, and flexible thermal modifications, is better suited for complex devices like humanoid robots and multi-arm collaborative robots.

5. Advantages, Disadvantages, and Future Application Prospects

1. Core Advantages

Supercomputing Power and Efficiency: 128GB memory + Blackwell architecture supports local operation of models with tens of billions of parameters, with industry-leading energy efficiency.

Deployment Flexibility: Compact core module, modifiable thermal design, adaptable to various robot installation scenarios.

Mature Ecosystem: Compatible with tools like Isaac Sim, ROS, and Groot, low development barrier.

High-Speed Expansion: QSFP28 interface meets high-bandwidth device connection needs, compensating for interface type limitations.

2. Areas for Improvement

Interface Simplification: The lack of a 40-pin GPIO header and dedicated camera interface may affect compatibility with traditional sensors.

Storage Expansion: No additional M.2 slots; high-speed storage expansion requires QSFP28 adapters.

3. Future Applications

From an industry perspective, Thor can also be widely applied in:

Industrial Humanoid Robots: Power coordinated multi-joint motion and handle precision assembly and material sorting tasks.

Autonomous Driving Vehicles: Parse LiDAR and camera data in real-time for path planning and obstacle avoidance.

Service Robots: Enable natural language interaction via LLMs, combined with visual models to complete tasks like home cleaning and object delivery.

Conclusion

NVIDIA Jetson Thor redefines the standard for the robot "brain" with its core features of "high performance, high flexibility, and high compatibility." It is not just a computing module but a key bridge connecting simulation and reality, algorithms and hardware. For developers, Thor lowers the barrier to complex robotics development, enabling full workflow verification from AI model training to hardware deployment without relying on expensive data center computing power. For the industry, the launch of Thor will accelerate the commercialization of products like humanoid robots and multimodal collaborative robots, speeding up the realization of "robots entering reality."

As the surrounding ecosystem continues to mature (e.g., interface adapter solutions, dedicated algorithm optimization), Jetson AGX Thor is expected to become the "standard computing core" in the field of robotics development, powering more innovative applications.